We have all read that DevOps transformation is cultural and organizational. At this point, I don’t think anyone would dispute this. The trouble is that if you start with tooling (as I and many others would recommend), you must be vigilant not to recreate the dysfunction you are trying to eliminate. Melvin Conway (of Conway’s Law) stated it best when he said:

“Organizations which design systems … are constrained to produce designs which are copies of the communication structures of these organizations.” [1]

So how do you protect yourself or prevent yourself from codifying the old? What are some of the common tooling pitfalls that impede adoption or derail transformation?

Pitfall 1: Little/No collaboration (single department or group)

This is the most common anti-pattern I encounter in the field and perfectly matches Conway’s Law mentioned above. Rather than work with “them”, we avoid collaboration. Infrastructure teams build infrastructure services and portals, developers implement build automation and dashboards, and QA teams build test automation. After the investments are made, many leaders look on in disbelief: despite significant investments in tooling, adoption is low and IT performance hasn’t significantly improved. (To better understand why, I recommend researching Goldratt’s Theory of Constraints.)

Many customers start this way because their span of control or influence is limited to their respective department. It is easier to start within the confines of a single department than to add complexity and possible conflict with other stakeholder groups. As such, these customers assemble a team and start building a piece of the tool chain, such as build automation, server provisioning, or automated testing. While this approach is effective at optimizing a department or a single step in the SDLC workflow, it is rarely effective at optimizing the overall productivity of the system or accelerating deployments.

For example, I recently developed an MVP tool chain for a customer who was responsible for build and release management but wasn’t positioned to collaborate with the development, test, and infrastructure groups. In the end, the tool chain consisted of only a code repository, an artifact repository, a compiler, and a deployment engine. It did not include unit test integration or code analysis and wasn’t linked to the infrastructure provisioning system. While this tool chain did speed up the team’s ability to create deployable code, it couldn’t validate that the artifacts being created were any good, couldn’t provide real-time feedback to DEV teams regarding code quality and functional completeness, and couldn’t verify that the packaged application was compatible with the available infrastructure. Note that future stages of the roadmap did expand on this early MVP, but the example is still relevant. Without collaboration, the value of the tool chain is limited, its ability to impact IT performance is negligible, and the work completed is at risk of significant rework (even a full rewrite) once an enterprise vision is established.

The remediation is to collaborate more through cross-functional design and build teams. In other words, work across departments, practice influencing others, and most importantly learn from others so that teams can gain greater awareness of the broader development and deployment pipeline. Reinforce collaboration through shared metrics focused on system throughput, that is, how many changes are successfully deployed to production, rather than on departmental metrics like systems provisioned, production outages, or code complete.
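As a rough illustration of a shared throughput metric, here is a minimal Python sketch. The `Deployment` record, its fields, and the `throughput` function are hypothetical names invented for this example; a real pipeline would pull this data from its own deployment history.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable


@dataclass(frozen=True)
class Deployment:
    """One attempted production deployment (hypothetical record layout)."""
    change_id: str
    deployed_on: date
    succeeded: bool  # True if the change went live without being rolled back


def throughput(deployments: Iterable[Deployment], start: date, end: date) -> int:
    """Count changes successfully deployed to production between start and end, inclusive."""
    return sum(
        1 for d in deployments
        if d.succeeded and start <= d.deployed_on <= end
    )


# Example data: three attempted changes, one of which failed.
history = [
    Deployment("change-1", date(2015, 4, 6), True),
    Deployment("change-2", date(2015, 4, 7), False),
    Deployment("change-3", date(2015, 4, 20), True),
]
first_week = throughput(history, date(2015, 4, 6), date(2015, 4, 12))
whole_month = throughput(history, date(2015, 4, 1), date(2015, 4, 30))
```

The point of the sketch is that the number belongs to the whole pipeline: no single department can improve it without the others.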

Pitfall 2: Closed architecture (not easily extensible)

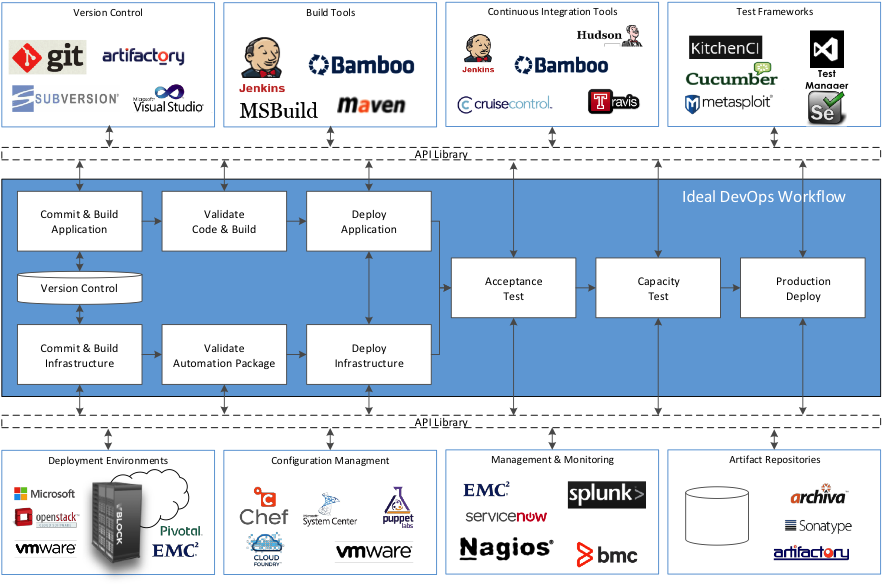

This is the ultimate paradox IMO. DevOps tool chains are built to handle the rapid introduction of application and infrastructure changes into an environment, but many of these tool chains are themselves inflexible and cannot easily add or remove tools. When designing a tool chain, I urge customers to think globally, that is, to think past the immediate problem statement, pilot application, or step in the workflow. As your DevOps transformation gains steam, new tools are going to be added to support non-conforming legacy workloads as well as new, cloud-native technologies like Docker or Cloud Foundry. To account for this, DevOps tool chains need to embrace the concepts and best practices inherent in service-oriented architecture, like encapsulation, abstraction, and decoupling. By building a set of loosely coupled services, such as Unit Test or Create VM, that are linked to an encoded workflow and abstracted by an API framework, your tool chain becomes very flexible and adaptable as long as new tools have compatible APIs. Using this pattern, your tool chain will consist of a set of objects, parameters, and policies that define how and when to use a tool once a specific set of rules is met. In practice this approach does add complexity and requires a development contract for adding new tools, but in exchange it offers significant flexibility and resiliency for your tool chain and investment.
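To make the loosely coupled pattern concrete, here is a minimal Python sketch, not a definitive design. The `ToolChain` class, the step names, and the `fake_*` functions are all hypothetical; in a real tool chain each registered service would wrap an actual tool’s API rather than set a value in a dictionary.

```python
from typing import Callable, Dict, List

# The "development contract": every service takes a context dict and returns one.
Service = Callable[[dict], dict]


class ToolChain:
    """Maps workflow step names to interchangeable service implementations."""

    def __init__(self) -> None:
        self._services: Dict[str, Service] = {}

    def register(self, step: str, service: Service) -> None:
        # Registering under an existing step name replaces the old tool.
        self._services[step] = service

    def run(self, workflow: List[str], context: dict) -> dict:
        for step in workflow:
            if step not in self._services:
                raise KeyError(f"no tool registered for step '{step}'")
            # Pass a copy so a service cannot mutate the caller's dict.
            context = self._services[step](dict(context))
        return context


def fake_unit_test(ctx: dict) -> dict:
    ctx["tests_passed"] = True  # stand-in for calling a real test runner
    return ctx


def fake_create_vm(ctx: dict) -> dict:
    ctx["vm_id"] = "vm-001"  # stand-in for calling a provisioning API
    return ctx


def fake_create_container(ctx: dict) -> dict:
    ctx["container_id"] = "ctr-001"  # stand-in for a container platform
    return ctx


workflow = ["unit_test", "provision"]  # the encoded workflow

chain = ToolChain()
chain.register("unit_test", fake_unit_test)
chain.register("provision", fake_create_vm)
vm_result = chain.run(workflow, {"app": "demo"})

# Swap the provisioning tool without touching the workflow definition.
chain.register("provision", fake_create_container)
container_result = chain.run(workflow, {"app": "demo"})
```

Because the workflow refers only to step names, replacing the VM provisioner with a container platform changes one registration and nothing else.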

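The remediation is to design the tool chain as a set of loosely coupled services behind a common API layer, so that tools can be added, replaced, or removed without reworking the rest of the chain.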

Pitfall 3: Cannot replicate on-demand

I love introducing (or re-introducing) customers to Chaos Monkey™. For those unfamiliar, Chaos Monkey™ is a utility that destroys a live production server at random. It was developed to test the resiliency and response time of the infrastructure and automation system to failures. Most customers that I meet think this concept is crazy: intentionally destroying a system just to make sure you can recover with little to no impact to service. If you don’t have any confidence in your automation platform and MTTR metrics keep you up at night, I agree that running Chaos Monkey™ is bananas (sorry, couldn’t resist).

Being able to replicate on-demand has two prerequisites, namely a known good state and automated build processes. Known good state is a specific version of an application and its corresponding configurations coupled with a specific version of an infrastructure and its configurations. ALL these artifacts are created and tested in the development (DEV) stage of the SDLC process and then reused in later stages of the SDLC for testing. Release candidates, or packaged artifacts, that successfully move through the SDLC process are promoted to Production (PROD). PROD releases trigger a new known good state to be defined. This then becomes the standard against which all new changes are tested.
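One way to picture a known good state is as an immutable record that pairs application and infrastructure versions. The Python sketch below rests on assumptions: the field names, the `ReleaseLedger` class, and the version strings are invented for illustration and do not come from any particular tool.

```python
from dataclasses import asdict, dataclass, replace
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class KnownGoodState:
    """A tested pairing of application and infrastructure versions."""
    app_version: str
    app_config_version: str
    infra_version: str
    infra_config_version: str


class ReleaseLedger:
    """Tracks the current known good state, i.e. what is running in PROD."""

    def __init__(self) -> None:
        self.current: Optional[KnownGoodState] = None

    def changes_against_baseline(
        self, candidate: KnownGoodState
    ) -> Dict[str, Tuple[Optional[str], str]]:
        """Return {field: (baseline value, candidate value)} for every field that differs."""
        baseline = asdict(self.current) if self.current is not None else {}
        return {
            name: (baseline.get(name), value)
            for name, value in asdict(candidate).items()
            if baseline.get(name) != value
        }

    def promote_to_prod(self, candidate: KnownGoodState) -> None:
        # A PROD release defines the new known good state.
        self.current = candidate


ledger = ReleaseLedger()
baseline = KnownGoodState("1.4.0", "appcfg-12", "infra-7", "infracfg-3")
ledger.promote_to_prod(baseline)

# A release candidate that changes only the application version.
candidate = replace(baseline, app_version="1.5.0")
delta = ledger.changes_against_baseline(candidate)
ledger.promote_to_prod(candidate)
```

Here `delta` names exactly what a release candidate changes relative to PROD, which is the standard every new change is tested against.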

The second prerequisite is an automated build and configuration process. These automated processes are needed to take bare metal servers, virtual machines, containers, and/or existing systems and convert them into working application environments. Integrated tooling enables each layer of the environment and the corresponding configurations to be systematically applied until the desired state (a.k.a. the known good state) is achieved. Tools like Puppet and Chef take this paradigm a step further: they use the known good state as a ‘declared state’ and manage the environment to maintain that ‘declared state’. Cloud-native technologies, like Pivotal Cloud Foundry or Docker, shift this paradigm a bit and deliver a standardized version of a container as a service in which an application can run. Developers wanting to use these platforms write code that complies with the usage contract of a given container. Regardless of what type of tooling your application requires, automating the end-to-end build process is key to replicating environments on-demand.
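The ‘declared state’ idea can be sketched in a few lines of Python. This is a conceptual illustration only and is not how Puppet or Chef are implemented or invoked: `plan_changes` and `converge` are hypothetical functions, and the environment is reduced to a plain dictionary of settings.

```python
from typing import Dict, List, Optional, Tuple

Action = Tuple[str, str, Optional[str]]  # (operation, setting name, new value)


def plan_changes(declared: Dict[str, str], actual: Dict[str, str]) -> List[Action]:
    """List the actions needed to move the actual state to the declared state."""
    actions: List[Action] = []
    for key, wanted in sorted(declared.items()):
        if key not in actual:
            actions.append(("add", key, wanted))
        elif actual[key] != wanted:
            actions.append(("update", key, wanted))
    # Anything present but not declared is drift and gets removed.
    for key in sorted(actual.keys() - declared.keys()):
        actions.append(("remove", key, None))
    return actions


def converge(declared: Dict[str, str], actual: Dict[str, str]) -> Dict[str, str]:
    """Apply the planned actions to a copy of the actual state and return the result."""
    result = dict(actual)
    for operation, key, value in plan_changes(declared, actual):
        if operation == "remove":
            del result[key]
        else:
            result[key] = value
    return result


declared_state = {"java": "8", "app_server": "tomcat-8", "port": "8080"}
drifted_server = {"java": "7", "port": "8080", "debug_agent": "on"}

plan = plan_changes(declared_state, drifted_server)
repaired = converge(declared_state, drifted_server)
```

Running `converge` again on `repaired` plans no actions, which is the property that lets such tools be run repeatedly to hold an environment at its declared state.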

To remedy an inability to replicate on demand, keep these two prerequisites in mind and apply them as follows:

1. Version your infrastructure like the application’s code base. By versioning infrastructure AND code, you can confidently link known good infrastructure configurations with known good and compatible application versions.

2. Build once and replicate deployments from known good packages. The infrastructure build and code packaging process should only occur once during the DEV stage. All subsequent phase/quality gates (including PROD) should be assembled using known good artifacts and deployment scripts tested repeatedly through the SDLC and deployment processes.
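The “build once” rule can be enforced mechanically by fingerprinting the package when it is built and refusing to promote anything that does not match. The following Python sketch makes several assumptions: the stage list (including a TEST stage), the `Promotion` class, and the sample bytes are hypothetical, and SHA-256 is simply one reasonable choice of fingerprint.

```python
import hashlib

STAGES = ["DEV", "TEST", "PROD"]  # hypothetical stage names for this sketch


def fingerprint(artifact: bytes) -> str:
    """Return a SHA-256 hex digest identifying the exact package contents."""
    return hashlib.sha256(artifact).hexdigest()


class Promotion:
    """Moves one package through the stages, rejecting any rebuilt copy."""

    def __init__(self, artifact: bytes) -> None:
        self.expected = fingerprint(artifact)  # recorded once, at build time in DEV
        self._index = 0

    @property
    def stage(self) -> str:
        return STAGES[self._index]

    def promote(self, artifact: bytes) -> str:
        """Advance to the next stage if the artifact is the one built in DEV."""
        if fingerprint(artifact) != self.expected:
            raise ValueError("artifact differs from the package built in DEV")
        if self._index == len(STAGES) - 1:
            raise RuntimeError("artifact is already in PROD")
        self._index += 1
        return self.stage


package = b"application build 42"  # stand-in for real package bytes
release = Promotion(package)
release.promote(package)              # DEV -> TEST
final_stage = release.promote(package)  # TEST -> PROD
```

A package rebuilt for a later stage, even from the same source, would normally produce a different digest and be rejected, so what reaches PROD is the package that was tested.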

Wrapping up

While there are many other factors that can contribute to the overall success or failure of your DevOps transformation, paying careful attention to how you select tools and build continuous delivery tool chains will provide you with a foundation that can mature and scale as you transform the enterprise.

[1] Conway, Melvin E. (April 1968), “How do Committees Invent?”, Datamation 14 (5): 28–31, retrieved 2015-04-10